Download Developing Solutions for Microsoft Azure.AZ-203.SelfTestEngine.2019-03-15.20q.vcex

| Field | Value |
| --- | --- |
| Vendor | Microsoft |
| Exam Code | AZ-203 |
| Exam Name | Developing Solutions for Microsoft Azure |
| Date | Mar 15, 2019 |
| File Size | 6 MB |

Demo Questions

Question 1

You have an Azure Batch project that processes and converts files and stores the files in Azure storage. You are developing a function to start the batch job.

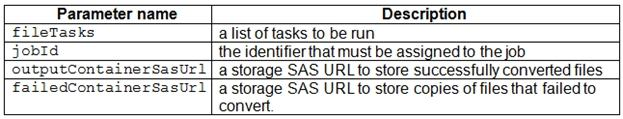

You add the following parameters to the function.

You must ensure that converted files are placed in the container referenced by the outputContainerSasUrl parameter. Files that fail to convert are placed in the container referenced by the failedContainerSasUrl parameter.

You need to ensure the files are correctly processed.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: CreateJob

Box 2: TaskSuccess

TaskSuccess: Upload the file(s) only after the task process exits with an exit code of 0.

Incorrect: TaskCompletion: Upload the file(s) after the task process exits, no matter what the exit code was.

Box 3: TaskFailure

TaskFailure: Upload the file(s) only after the task process exits with a nonzero exit code.

Box 4: OutputFiles

To specify output files for a task, create a collection of OutputFile objects and assign it to the CloudTask.OutputFiles property when you create the task.

References:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.batch.protocol.models.outputfileuploadcondition

https://docs.microsoft.com/en-us/azure/batch/batch-task-output-files
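The three upload conditions described above can be sketched in a language-neutral way. The Python snippet below is only an illustration of the exit-code logic, not Batch SDK code: `UploadCondition`, `should_upload`, and `route_output` are hypothetical names, and the container arguments stand in for the SAS URLs from the question.

```python
from enum import Enum


class UploadCondition(Enum):
    """Hypothetical stand-in for the upload conditions named in the explanation."""
    TASK_SUCCESS = "TaskSuccess"
    TASK_FAILURE = "TaskFailure"
    TASK_COMPLETION = "TaskCompletion"


def should_upload(condition: UploadCondition, exit_code: int) -> bool:
    """Decide whether files are uploaded for a task that exited with exit_code."""
    if condition is UploadCondition.TASK_SUCCESS:
        return exit_code == 0      # only after a zero exit code
    if condition is UploadCondition.TASK_FAILURE:
        return exit_code != 0      # only after a nonzero exit code
    return True                    # TaskCompletion: any exit code


def route_output(exit_code: int, output_container: str, failed_container: str) -> list:
    """Return the containers that receive files, given the two rules from the question."""
    rules = [
        (UploadCondition.TASK_SUCCESS, output_container),
        (UploadCondition.TASK_FAILURE, failed_container),
    ]
    return [target for condition, target in rules if should_upload(condition, exit_code)]
```

With these two rules, a task that exits with code 0 routes its files only to the output container, and any other exit code routes them only to the failed container. Using TaskCompletion for either rule would send files to that container regardless of the result, which is why it is the incorrect choice here.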

Question 2

You are writing code to create and run an Azure Batch job.

You have created a pool of compute nodes.

You need to choose the right class and its method to submit a batch job to the Batch service.

Which method should you use?

- A. JobOperations.EnableJobAsync(String, IEnumerable<BatchClientBehavior>, CancellationToken)

- B. JobOperations.CreateJob()

- C. CloudJob.Enable(IEnumerable<BatchClientBehavior>)

- D. JobOperations.EnableJob(String, IEnumerable<BatchClientBehavior>)

- E. CloudJob.CommitAsync(IEnumerable<BatchClientBehavior>, CancellationToken)

Correct answer: E

Explanation:

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

The Commit method submits the job to the Batch service. Initially the job has no tasks.

{

CloudJob job = batchClient.JobOperations.CreateJob();

job.Id = JobId;

job.PoolInformation = new PoolInformation { PoolId = PoolId };

job.Commit();

}

...

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 3

You develop a website. You plan to host the website in Azure. You expect the website to experience high traffic volumes after it is published.

You must ensure that the website remains available and responsive while minimizing cost.

You need to deploy the website.

What should you do?

- A. Deploy the website to a virtual machine. Configure the virtual machine to automatically scale when the CPU load is high.

- B. Deploy the website to an App Service that uses the Shared service tier. Configure the App Service plan to automatically scale when the CPU load is high.

- C. Deploy the website to an App Service that uses the Standard service tier. Configure the App Service plan to automatically scale when the CPU load is high.

- D. Deploy the website to a virtual machine. Configure a Scale Set to increase the virtual machine instance count when the CPU load is high.

Correct answer: C

Explanation:

Windows Azure Web Sites (WAWS) offers 3 modes: Standard, Free, and Shared.

Standard mode carries an enterprise-grade SLA (Service Level Agreement) of 99.9% monthly, even for sites with just one instance.

Standard mode runs on dedicated instances, making it different from the other ways to buy Windows Azure Web Sites.

Incorrect Answers:

B: Shared and Free modes do not offer the scaling flexibility of Standard, and they have some important limits.

Shared mode, as the name states, uses shared compute resources and also has a CPU limit. Because of these limits, neither Free nor Shared is likely to be the best choice for a production environment.

Question 4

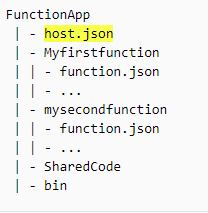

You develop a serverless application using several Azure Functions. These functions connect to data from within the code.

You want to configure tracing for an Azure Function App project.

You need to change configuration settings in the host.json file.

Which tool should you use?

- A. Visual Studio

- B. Azure portal

- C. Azure PowerShell

- D. Azure Functions Core Tools (Azure CLI)

Correct answer: B

Explanation:

The function editor built into the Azure portal lets you update the function.json file and the code file for a function. The host.json file, which contains some runtime-specific configurations, is in the root folder of the function app.

References:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-reference#fileupdate

Question 5

You are developing a mobile instant messaging app for a company.

The mobile app must meet the following requirements:

- Support offline data sync.

- Update the latest messages during normal sync cycles.

You need to implement Offline Data Sync.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Retrieve records from Offline Data Sync on every call to the PullAsync method.

- B. Retrieve records from Offline Data Sync using an Incremental Sync.

- C. Push records to Offline Data Sync using an Incremental Sync.

- D. Return the updatedAt column from the Mobile Service Backend and implement sorting by using the column.

- E. Return the updatedAt column from the Mobile Service Backend and implement sorting by the message id.

Correct answer: BE

Explanation:

B: Incremental Sync: the first parameter to the pull operation is a query name that is used only on the client. If you use a non-null query name, the Azure Mobile SDK performs an incremental sync. Each time a pull operation returns a set of results, the latest updatedAt timestamp from that result set is stored in the SDK local system tables. Subsequent pull operations retrieve only records after that timestamp.

E (not D): To use incremental sync, your server must return meaningful updatedAt values and must also support sorting by this field. However, since the SDK adds its own sort on the updatedAt field, you cannot use a pull query that has its own orderBy clause.

References:

https://docs.microsoft.com/en-us/azure/app-service-mobile/app-service-mobile-offline-data-sync
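The incremental sync behavior described above can be illustrated with a small simulation. This Python sketch is not the Azure Mobile SDK: `Record`, `LocalStore`, and `pull` are hypothetical names, timestamps are plain integers, and the "server" is just a list. It only shows the bookkeeping: a non-null query name makes the client remember the latest updatedAt value and request only newer rows on the next pull.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    id: str
    text: str
    updated_at: int  # stand-in for the server's updatedAt timestamp


@dataclass
class LocalStore:
    records: dict = field(default_factory=dict)    # id -> Record
    last_seen: dict = field(default_factory=dict)  # query name -> latest updated_at


def pull(store: LocalStore, server_rows: list, query_name: Optional[str] = None) -> int:
    """Copy rows into the local store and return how many were transferred."""
    # The sync layer applies its own sort on updated_at.
    rows = sorted(server_rows, key=lambda r: r.updated_at)
    since = store.last_seen.get(query_name) if query_name is not None else None
    if since is not None:
        # Incremental sync: only rows newer than the stored timestamp.
        rows = [r for r in rows if r.updated_at > since]
    for row in rows:
        store.records[row.id] = row
    if query_name is not None and rows:
        store.last_seen[query_name] = rows[-1].updated_at
    return len(rows)
```

Calling `pull` with a query name such as `"allMessages"` transfers every row the first time and only new rows afterwards, while passing `None` transfers the full set on every call.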

Question 6

You need to configure retries in the LoadUserDetails function in the Database class without impacting user experience.

What code should you insert on line DB07?

To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: Policy

RetryPolicy retry = Policy

.Handle<HttpRequestException>()

.Retry(3);

The example above creates a retry policy that retries up to three times if an action fails with an exception handled by the policy.

Box 2: WaitAndRetryAsync(3, i => TimeSpan.FromMilliseconds(100 * Math.Pow(2, i - 1)));

A common retry strategy is exponential backoff: retries are made quickly at first, then at progressively longer intervals, to avoid hitting a struggling subsystem with repeated frequent calls.

Example:

Policy

.Handle<SomeExceptionType>()

.WaitAndRetry(3, retryAttempt =>

TimeSpan.FromSeconds(Math.Pow(2, retryAttempt))

);

References:

https://github.com/App-vNext/Polly/wiki/Retry
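To make the Box 2 delay formula concrete, the sketch below reproduces the same arithmetic in Python rather than with Polly. The names `backoff_delays_ms` and `call_with_retry` are hypothetical, the attempt index is assumed to start at 1 as in the lambda above, and `ConnectionError` is only a stand-in for the handled exception type.

```python
import time


def backoff_delays_ms(retries: int, base_ms: float = 100.0) -> list:
    """Delay before each retry: base_ms * 2^(i - 1) for i = 1..retries."""
    return [base_ms * 2 ** (i - 1) for i in range(1, retries + 1)]


def call_with_retry(action, retries=3, base_ms=100.0,
                    sleep=time.sleep, handled=(ConnectionError,)):
    """Run action, retrying up to `retries` times with exponential backoff."""
    for delay_ms in backoff_delays_ms(retries, base_ms):
        try:
            return action()
        except handled:
            sleep(delay_ms / 1000.0)  # wait before the next attempt
    # Final attempt: any exception now propagates to the caller.
    return action()


print(backoff_delays_ms(3))  # [100.0, 200.0, 400.0]
```

With three retries and a 100 ms base, the total added wait is at most 700 ms, which is short enough to stay unnoticed by most users while still spacing out calls to a struggling dependency.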

Question 7

You use Azure Table storage to store customer information for an application. The data contains customer details and is partitioned by last name.

You need to create a query that returns all customers with the last name Smith.

Which code segment should you use?

- A. TableQuery.GenerateFilterCondition("PartitionKey", Equals, "Smith")

- B. TableQuery.GenerateFilterCondition("LastName", Equals, "Smith")

- C. TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "Smith")

- D. TableQuery.GenerateFilterCondition("LastName", QueryComparisons.Equal, "Smith")

Correct answer: C

Explanation:

Retrieve all entities in a partition. The following code example specifies a filter for entities where 'Smith' is the partition key. This example prints the fields of each entity in the query results to the console.

Construct the query operation for all customer entities where PartitionKey="Smith".

TableQuery<CustomerEntity> query = new TableQuery<CustomerEntity>().Where(TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "Smith"));

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/table-storage-how-to-use-dotnet
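For orientation, the filter condition in the answer resolves to an OData-style filter string. The Python sketch below imitates that string construction for string values only. It is not the storage SDK: `generate_filter_condition` and the `EQUAL` constant are hypothetical names, and the doubling of single quotes is an assumption about how embedded quotes are escaped.

```python
# Hypothetical constant mirroring the "eq" comparison operator.
EQUAL = "eq"


def generate_filter_condition(property_name: str, operation: str, value: str) -> str:
    """Build a filter such as: PartitionKey eq 'Smith' (string values only)."""
    escaped = value.replace("'", "''")  # assumption: quotes are escaped by doubling
    return f"{property_name} {operation} '{escaped}'"


print(generate_filter_condition("PartitionKey", EQUAL, "Smith"))
# PartitionKey eq 'Smith'
```

Because the table is partitioned by last name, filtering on PartitionKey targets a single partition, whereas a filter on a LastName property would not match the partition key described in the question.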

Question 8

You need to construct the link to the summary report for the email that is sent to users.

What should you do?

- A. Create a SharedAccessBlobPolicy and add it to the container's SharedAccessPolicies. Call GetSharedAccessSignature on the blob and use the resulting link.

- B. Create a SharedAccessAccountPolicy, call GetSharedAccessSignature on the storage account, and use the resulting link.

- C. Create a SharedAccessBlobPolicy and set the expiry time to two weeks from today. Call GetSharedAccessSignature on the blob and use the resulting link.

- D. Create a SharedAccessBlobPolicy and set the expiry time to two weeks from today. Call GetSharedAccessSignature on the container and use the resulting link.

Correct answer: D

Explanation:

Scenario: Processing is performed by an Azure Function that uses version 2 of the Azure Function runtime. Once processing is completed, results are stored in Azure Blob Storage and an Azure SQL database. Then, an email summary is sent to the user with a link to the processing report. The link to the report must remain valid if the email is forwarded to another user.

Create a stored access policy to manage signatures on a container's resources, and then generate the shared access signature on the container, setting the constraints directly on the signature.

Code example: Add a method that generates the shared access signature for the container and returns the signature URI.

static string GetContainerSasUri(CloudBlobContainer container)

{

//Set the expiry time and permissions for the container.

//In this case no start time is specified, so the shared access signature becomes valid immediately.

SharedAccessBlobPolicy sasConstraints = new SharedAccessBlobPolicy();

sasConstraints.SharedAccessExpiryTime = DateTimeOffset.UtcNow.AddHours(24);

sasConstraints.Permissions = SharedAccessBlobPermissions.List | SharedAccessBlobPermissions.Write;

//Generate the shared access signature on the container, setting the constraints directly on the signature.

string sasContainerToken = container.GetSharedAccessSignature(sasConstraints);

//Return the URI string for the container, including the SAS token.

return container.Uri + sasContainerToken;

}

Incorrect Answers:

C: Call GetSharedAccessSignature on the container, not on the blob.

References:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-dotnet-shared-access-signature-part-2

Question 9

You need to meet the LabelMaker application security requirement.

What should you do?

- A. Create a conditional access policy and assign it to the Azure Kubernetes Service cluster.

- B. Place the Azure Active Directory account into an Azure AD group. Create a ClusterRoleBinding and assign it to the group.

- C. Create a RoleBinding and assign it to the Azure AD account.

- D. Create a Microsoft Azure Active Directory service principal and assign it to the Azure Kubernetes Service (AKS) cluster.

Correct answer: B

Explanation:

Scenario: The LabelMaker applications must be secured by using an AAD account that has full access to all namespaces of the Azure Kubernetes Service (AKS) cluster.

Permissions can be granted within a namespace with a RoleBinding, or cluster-wide with a ClusterRoleBinding.

References:

https://kubernetes.io/docs/reference/access-authn-authz/rbac/

Question 10

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You need to ensure that the SecurityPin security requirements are met.

Solution: Enable Always Encrypted for the SecurityPin column using a certificate based on a trusted certificate authority. Update the Getting Started document with instructions to ensure that the certificate is installed on user machines.

Does the solution meet the goal?

- A. Yes

- B. No

Correct answer: B

Explanation:

Enabling Always Encrypted is correct, but only the WebAppIdentity service principal should be given access to the certificate.

Scenario: Users’ SecurityPin must be stored in such a way that access to the database does not allow the viewing of SecurityPins. The web application is the only system that should have access to SecurityPins.
