Download Developing Solutions for Microsoft Azure.AZ-203.NewDumps.2020-01-23.64q.vcex

| Field | Value |
|---|---|
| Vendor | Microsoft |
| Exam Code | AZ-203 |
| Exam Name | Developing Solutions for Microsoft Azure |
| Date | Jan 23, 2020 |
| File Size | 6 MB |

How to open VCEX files?

Files with VCEX extension can be opened by ProfExam Simulator.


Demo Questions

Question 1

You need to resolve a notification latency issue.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Set Always On to false.

- Set Always On to true.

- Ensure that the Azure Function is set to use a consumption plan.

- Ensure that the Azure Function is using an App Service plan.

Correct answer: BD

Explanation:

Azure Functions can run on either a Consumption plan or a dedicated App Service plan. If you run in dedicated mode, you need to turn on the Always On setting for your function app to run properly: without it, the Functions runtime goes idle after a few minutes of inactivity, and only HTTP triggers will actually "wake up" your functions. This is similar to how WebJobs must have Always On enabled.
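As a minimal sketch, assuming the function app is deployed with an ARM template, the Python snippet below builds the `Microsoft.Web/sites` resource for a function app on a dedicated App Service plan with `siteConfig.alwaysOn` set to `true`. The app name, plan name, and `apiVersion` are hypothetical placeholders, not values from the scenario.

```python
import json


def function_app_resource(app_name: str, plan_name: str, dedicated: bool) -> dict:
    """Build an ARM resource fragment for a function app.

    app_name, plan_name and the apiVersion are illustrative placeholders.
    """
    site_config = {}
    if dedicated:
        # On a dedicated App Service plan the runtime idles after inactivity
        # unless Always On is enabled. Always On is not available on the
        # Consumption plan, so it is left unset there.
        site_config["alwaysOn"] = True
    return {
        "type": "Microsoft.Web/sites",
        "apiVersion": "2018-11-01",
        "name": app_name,
        "kind": "functionapp",
        "location": "[resourceGroup().location]",
        "properties": {
            "serverFarmId": f"[resourceId('Microsoft.Web/serverfarms', '{plan_name}')]",
            "siteConfig": site_config,
        },
    }


if __name__ == "__main__":
    print(json.dumps(function_app_resource("anomaly-alerts", "alerts-plan", dedicated=True), indent=2))
```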

Scenario: Notification latency: Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

Anomaly detection service: You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service.

If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

References:

https://github.com/Azure/Azure-Functions/wiki/Enable-Always-On-when-running-on-dedicated-App-Service-Plan

Question 2

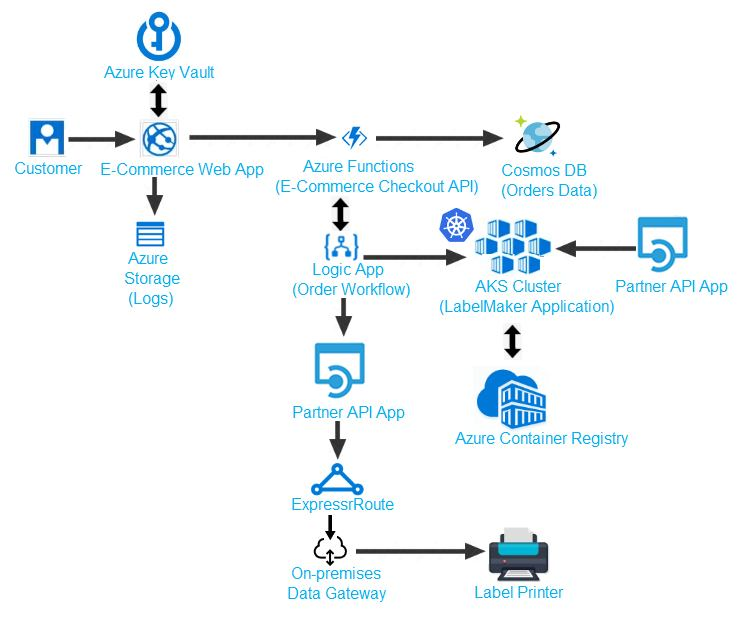

You need to support the requirements for the Shipping Logic App.

What should you use?

- Azure Active Directory Application Proxy

- Point-to-Site (P2S) VPN connection

- Site-to-Site (S2S) VPN connection

- On-premises Data Gateway

Correct answer: D

Explanation:

Before you can connect to on-premises data sources from Azure Logic Apps, download and install the on-premises data gateway on a local computer. The gateway works as a bridge that provides quick data transfer and encryption between data sources on premises (not in the cloud) and your logic apps.

The gateway supports BizTalk Server 2016.

Note: Microsoft has now fully incorporated the Azure BizTalk Services capabilities into Logic Apps and Azure App Service Hybrid Connections.

The Logic Apps Enterprise Integration Pack brings some of the enterprise B2B capabilities, such as AS2, X12, and EDI standards support.

Scenario: The Shipping Logic app must meet the following requirements:

- Support the ocean transport and inland transport workflows by using a Logic App.

- Support industry standard protocol X12 message format for various messages including vessel content details and arrival notices.

- Secure resources to the corporate VNet and use dedicated storage resources with a fixed costing model.

- Maintain on-premises connectivity to support legacy applications and final BizTalk migrations.
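The last requirement, on-premises connectivity, is what the gateway provides. The sketch below builds an ARM API connection (`Microsoft.Web/connections`) whose `parameterValues.gateway.id` points at the gateway resource registered in Azure. It is a hedged example: the SQL Server connector (`sql`), the server, database, and gateway names are hypothetical, and the exact `parameterValues` keys depend on the connector you use.

```python
import json


def onprem_connection(name: str, gateway_name: str, server: str, database: str) -> dict:
    """Sketch of an API connection that routes through an on-premises data gateway.

    All names are placeholders; parameter keys vary by connector.
    """
    return {
        "type": "Microsoft.Web/connections",
        "apiVersion": "2016-06-01",
        "name": name,
        "location": "[resourceGroup().location]",
        "properties": {
            "displayName": name,
            # Managed connector the logic app uses (SQL Server assumed here).
            "api": {
                "id": "[subscriptionResourceId('Microsoft.Web/locations/managedApis', resourceGroup().location, 'sql')]"
            },
            "parameterValues": {
                "server": server,
                "database": database,
                # Gateway resource created when the installed gateway is registered in Azure.
                "gateway": {
                    "id": f"[resourceId('Microsoft.Web/connectionGateways', '{gateway_name}')]"
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(onprem_connection("legacy-sql", "corp-gateway", "sql01", "shipping"), indent=2))
```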

References:

https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-gateway-install

Question 3

You are writing code to create and run an Azure Batch job.

You have created a pool of compute nodes.

You need to choose the right class and its method to submit a batch job to the Batch service.

Which method should you use?

- JobOperations.EnableJobAsync(String, IEnumerable<BatchClientBehavior>,CancellationToken)

- JobOperations.CreateJob()

- CloudJob.Enable(IEnumerable<BatchClientBehavior>)

- JobOperations.EnableJob(String,IEnumerable<BatchClientBehavior>)

- CloudJob.CommitAsync(IEnumerable<BatchClientBehavior>, CancellationToken)

Correct answer: E

Explanation:

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

The Commit method (or its asynchronous counterpart, CommitAsync) submits the job to the Batch service. Initially, the job has no tasks.

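```csharp
using Microsoft.Azure.Batch;

// Inside a method, with batchClient (an open BatchClient), JobId and PoolId already defined:

// CreateJob() returns an unbound CloudJob; nothing is sent to the service yet.
CloudJob job = batchClient.JobOperations.CreateJob();
job.Id = JobId;

// Run the job's tasks on the existing pool of compute nodes.
job.PoolInformation = new PoolInformation { PoolId = PoolId };

// Commit() submits the job; in async code use: await job.CommitAsync();
job.Commit();
```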

...
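To make the create-then-commit lifecycle concrete, here is a small in-memory model. `FakeBatchService`, `UnboundJob`, and `create_job` are hypothetical stand-ins, not the Batch SDK: they only mirror the pattern described above, where the job object is configured locally and reaches the service only when it is committed, starting with no tasks.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FakeBatchService:
    """Hypothetical in-memory stand-in for the Batch service (not the Batch SDK)."""
    jobs: Dict[str, dict] = field(default_factory=dict)


@dataclass
class UnboundJob:
    """Mimics the CloudJob pattern: configure locally, then commit once."""
    service: FakeBatchService
    id: Optional[str] = None
    pool_id: Optional[str] = None
    tasks: List[str] = field(default_factory=list)
    committed: bool = False

    async def commit_async(self) -> None:
        """Submit the job; analogous in spirit to CloudJob.CommitAsync."""
        if self.committed:
            raise RuntimeError("job has already been committed")
        if not self.id or not self.pool_id:
            raise ValueError("set the job id and pool id before committing")
        await asyncio.sleep(0)  # placeholder for the service round trip
        self.service.jobs[self.id] = {"pool_id": self.pool_id, "tasks": list(self.tasks)}
        self.committed = True


def create_job(service: FakeBatchService) -> UnboundJob:
    """Analogous to JobOperations.CreateJob(): builds a local object, sends nothing."""
    return UnboundJob(service)


async def main() -> FakeBatchService:
    service = FakeBatchService()
    job = create_job(service)
    job.id = "sim-job"
    job.pool_id = "sim-pool"
    assert "sim-job" not in service.jobs  # configured locally, not yet submitted
    await job.commit_async()
    return service


if __name__ == "__main__":
    print(asyncio.run(main()).jobs)
```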

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 4

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles.

You need to create compute nodes for the solution on Azure Batch.

What should you do?

- In the Azure portal, add a Job to a Batch account.

- In a .NET method, call the method: BatchClient.PoolOperations.CreateJob

- In Python, implement the class: JobAddParameter

- In Azure CLI, run the command: az batch pool create

- In a .NET method, call the method: BatchClient.PoolOperations.CreatePool

- In Python, implement the class: TaskAddParameter

- In the Azure CLI, run the command: az batch account create

Correct answer: E

Explanation:

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool. Compute nodes, however, are created together with the pool, which is why the pool comes first.

Note:

Step 1: Create a pool of compute nodes. When you create a pool, you specify the number of compute nodes for the pool, their size, and the operating system. When each task in your job runs, it's assigned to execute on one of the nodes in your pool.

Step 2: Create a job. A job manages a collection of tasks. You associate each job to a specific pool where that job's tasks will run.

Step 3: Add tasks to the job. Each task runs the application or script that you uploaded to process the data files it downloads from your Storage account. As each task completes, it can upload its output to Azure Storage.

Incorrect Answers:

C, F: JobAddParameter and TaskAddParameter define jobs and tasks, not compute nodes. To create a Batch pool in Python, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

B: BatchClient.PoolOperations does not have a CreateJob method; jobs are created with BatchClient.JobOperations.CreateJob.

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

https://docs.microsoft.com/en-us/azure/batch/quick-run-python
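The three steps above (pool, then job, then tasks) can be modeled in a few lines. This is a conceptual sketch, not the Batch API: the class names, the `simulate.py` command, and the round-robin placement are assumptions for illustration, since the real Batch scheduler decides which node runs each task.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Dict, List


@dataclass
class Pool:
    """Step 1: a pool fixes node count, VM size and operating system."""
    id: str
    node_count: int
    vm_size: str
    os: str

    def __post_init__(self) -> None:
        if self.node_count < 1:
            raise ValueError("a pool needs at least one compute node")

    def node_ids(self) -> List[str]:
        return [f"{self.id}-node-{i}" for i in range(self.node_count)]


@dataclass
class Job:
    """Step 2: a job manages a collection of tasks and is bound to one pool."""
    id: str
    pool: Pool
    tasks: List[str] = field(default_factory=list)

    def add_task(self, command_line: str) -> None:
        """Step 3: each task runs an application or script."""
        self.tasks.append(command_line)

    def schedule(self) -> Dict[str, str]:
        """Assign each task to a node of the job's pool (round-robin in this sketch)."""
        nodes = cycle(self.pool.node_ids())
        return {f"task-{i}": next(nodes) for i in range(len(self.tasks))}


if __name__ == "__main__":
    pool = Pool("sim-pool", node_count=2, vm_size="STANDARD_D2S_V3", os="ubuntu")
    job = Job("fleet-sim", pool)
    for fleet in ["fleet-a.csv", "fleet-b.csv", "fleet-c.csv"]:
        job.add_task(f"python simulate.py {fleet}")
    print(job.schedule())
    # {'task-0': 'sim-pool-node-0', 'task-1': 'sim-pool-node-1', 'task-2': 'sim-pool-node-0'}
```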

Question 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing a solution that will be deployed to an Azure Kubernetes Service (AKS) cluster. The solution will include a custom VNet, Azure Container Registry images, and an Azure Storage account.

The solution must allow dynamic creation and management of all Azure resources within the AKS cluster.

You need to configure an AKS cluster for use with the Azure APIs.

Solution: Enable the Azure Policy Add-on for Kubernetes to connect the Azure Policy service to the Gatekeeper admission controller for the AKS cluster. Apply a built-in policy to the cluster.

Does the solution meet the goal?

- Yes

- No

Correct answer: B

Explanation:

Instead, create an AKS cluster that supports network policy, then create and apply a network policy that allows traffic only from within a defined namespace.

References:

https://docs.microsoft.com/en-us/azure/aks/use-network-policies

Question 6

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing a solution that will be deployed to an Azure Kubernetes Service (AKS) cluster. The solution will include a custom VNet, Azure Container Registry images, and an Azure Storage account.

The solution must allow dynamic creation and management of all Azure resources within the AKS cluster.

You need to configure an AKS cluster for use with the Azure APIs.

Solution: Create an AKS cluster that supports network policy. Create and apply a network policy to allow traffic only from within a defined namespace.

Does the solution meet the goal?

- Yes

- No

Correct answer: A

Explanation:

When you run modern, microservices-based applications in Kubernetes, you often want to control which components can communicate with each other. The principle of least privilege should be applied to how traffic can flow between pods in an Azure Kubernetes Service (AKS) cluster; for example, you likely want to block traffic directly to back-end applications. The Network Policy feature in Kubernetes lets you define rules for ingress and egress traffic between pods in a cluster.
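A policy that allows traffic only from within one namespace can be expressed as a standard `networking.k8s.io/v1` NetworkPolicy. The sketch below builds it as a Python dict and prints JSON, which `kubectl apply -f` accepts. The namespace and policy names are hypothetical, and it assumes the cluster was created with network policy enabled (for example `az aks create --network-policy azure` or `calico`).

```python
import json


def same_namespace_only_policy(namespace: str, name: str = "allow-same-namespace") -> dict:
    """NetworkPolicy that admits ingress to every pod in `namespace` only from that namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # An empty podSelector selects every pod in the policy's namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                # A podSelector with no namespaceSelector matches only pods in the
                # policy's own namespace, so traffic from other namespaces is denied.
                {"from": [{"podSelector": {}}]}
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(same_namespace_only_policy("development"), indent=2))
```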

References:

https://docs.microsoft.com/en-us/azure/aks/use-network-policies

Question 7

You need to implement the e-commerce checkout API.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Set the function template’s Mode property to Webhook and the Webhook type property to Generic JSON.

- Create an Azure Function using the HTTP POST function template.

- In the Azure Function App, enable Cross-Origin Resource Sharing (CORS) with all origins permitted.

- In the Azure Function App, enable Managed Service Identity (MSI).

- Set the function template’s Mode property to Webhook and the Webhook type property to GitHub.

- Create an Azure Function using the Generic webhook function template.

Correct answer: ABD

Explanation:

Scenario: E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

D: A managed identity from Azure Active Directory allows your app to easily access other AAD-protected resources such as Azure Key Vault.
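Under the hood, once a managed identity is enabled, App Service and Azure Functions expose a local token endpoint through the `IDENTITY_ENDPOINT` and `IDENTITY_HEADER` environment variables. The sketch below builds (without sending) a token request for Azure Key Vault using REST protocol version `2019-08-01`. In production code you would more likely use `ManagedIdentityCredential` from the Azure Identity client library; the endpoint value in the usage example is a made-up placeholder.

```python
import json
import os
import urllib.parse
import urllib.request
from typing import Mapping, Optional

KEY_VAULT_RESOURCE = "https://vault.azure.net"


def build_token_request(resource: str = KEY_VAULT_RESOURCE,
                        env: Optional[Mapping[str, str]] = None) -> urllib.request.Request:
    """Build (without sending) a managed identity token request for `resource`."""
    env = os.environ if env is None else env
    query = urllib.parse.urlencode({"resource": resource, "api-version": "2019-08-01"})
    return urllib.request.Request(
        f"{env['IDENTITY_ENDPOINT']}?{query}",
        headers={"X-IDENTITY-HEADER": env["IDENTITY_HEADER"]},
        method="GET",
    )


def fetch_access_token(request: urllib.request.Request) -> str:
    """Send the request; works only inside App Service/Functions with an identity enabled."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["access_token"]


if __name__ == "__main__":
    demo_env = {"IDENTITY_ENDPOINT": "http://127.0.0.1:41741/MSI/token/",
                "IDENTITY_HEADER": "example-secret"}
    print(build_token_request(env=demo_env).full_url)
```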

Incorrect Answers:

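C: CORS is an HTTP feature that enables a web application running under one domain to access resources in another domain. It does not secure sign-ins, and permitting all origins would widen access rather than restrict it.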

References:

https://docs.microsoft.com/en-us/azure/app-service/overview-managed-identity

Question 8

You need to provision and deploy the order workflow.

Which three components should you include? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Connections

- On-premises Data Gateway

- Workflow definition

- Resources

- Functions

Correct answer: BCE

Explanation:

Scenario: The order workflow fails to run upon initial deployment to Azure.

Question 9

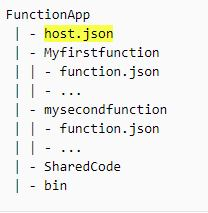

You develop a serverless application that includes Azure Functions by using Visual Studio. These functions connect to data from within the code. You deploy the functions to Azure.

You want to configure tracing for an Azure Function App project.

You need to change configuration settings in the host.json file.

Which tool should you use?

- Visual Studio

- Azure portal

- Azure PowerShell

- Azure Functions Core Tools (Azure CLI)

Correct answer: B

Explanation:

The function editor built into the Azure portal lets you update the function.json file and the code file for a function. The host.json file, which contains some runtime-specific configurations, is in the root folder of the function app.
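For reference, a minimal host.json that adjusts tracing could look like the output of the sketch below. It assumes Functions runtime 2.x or later, where log levels live under `logging.logLevel` (runtime 1.x used a `tracing` section instead). The chosen levels are illustrative, not taken from the scenario.

```python
import json

LOG_LEVELS = {"Trace", "Debug", "Information", "Warning", "Error", "Critical", "None"}


def host_json(default_level: str = "Information", function_level: str = "Debug") -> str:
    """Render a host.json (runtime 2.x+ schema) with tracing levels set."""
    for level in (default_level, function_level):
        if level not in LOG_LEVELS:
            raise ValueError(f"unknown log level: {level}")
    config = {
        "version": "2.0",
        "logging": {
            "logLevel": {
                "default": default_level,
                # The "Function" category covers logs emitted by function executions.
                "Function": function_level,
            }
        },
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(host_json())
```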

References:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-reference#fileupdate

Question 10

You are developing a mobile instant messaging app for a company.

The mobile app must meet the following requirements:

- Support offline data sync.

- Update the latest messages during normal sync cycles.

You need to implement Offline Data Sync.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Retrieve records from Offline Data Sync on every call to the PullAsync method.

- Retrieve records from Offline Data Sync using an Incremental Sync.

- Push records to Offline Data Sync using an Incremental Sync.

- Return the updatedAt column from the Mobile Service Backend and implement sorting by using the column.

- Return the updatedAt column from the Mobile Service Backend and implement sorting by the message id.

Correct answer: BD

Explanation:

B: Incremental sync: the first parameter to the pull operation is a query name that is used only on the client. If you use a non-null query name, the Azure Mobile SDK performs an incremental sync. Each time a pull operation returns a set of results, the latest updatedAt timestamp from that result set is stored in the SDK local system tables. Subsequent pull operations retrieve only records after that timestamp.

D (not E): To use incremental sync, your server must return meaningful updatedAt values and must also support sorting by this field; sorting by the message id does not meet this requirement. However, since the SDK adds its own sort on the updatedAt field, you cannot use a pull query that has its own orderBy clause.
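The incremental-sync mechanics can be simulated without the SDK. In this hypothetical model, `Backend`, `SyncClient`, and `Message` are illustrative names: the backend returns rows sorted by `updated_at`, the client stores the last timestamp per query name, and a `None` query name forces a full pull. The strict `>` comparison matches "records after that timestamp"; a production implementation would also need to handle several records sharing one timestamp.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Message:
    id: str
    text: str
    updated_at: int  # stands in for the updatedAt column (e.g. epoch seconds)


class Backend:
    """Hypothetical backend that returns updatedAt and sorts on it."""

    def __init__(self) -> None:
        self.rows: List[Message] = []

    def query(self, since: Optional[int]) -> List[Message]:
        rows = [m for m in self.rows if since is None or m.updated_at > since]
        # Sorting by updated_at lets the client take the last row as its checkpoint.
        return sorted(rows, key=lambda m: m.updated_at)


class SyncClient:
    def __init__(self, backend: Backend) -> None:
        self.backend = backend
        self.local: Dict[str, Message] = {}
        self.checkpoints: Dict[str, int] = {}  # per query name, like the SDK's system tables

    def pull(self, query_name: Optional[str]) -> int:
        """Pull changes; a non-None query name makes the pull incremental."""
        since = self.checkpoints.get(query_name) if query_name else None
        results = self.backend.query(since)
        for m in results:
            self.local[m.id] = m
        if query_name and results:
            self.checkpoints[query_name] = results[-1].updated_at
        return len(results)


if __name__ == "__main__":
    backend = Backend()
    backend.rows += [Message("1", "hi", 100), Message("2", "hello", 200)]
    client = SyncClient(backend)
    print(client.pull("messages"))  # first pull fetches everything
    backend.rows.append(Message("3", "new", 300))
    print(client.pull("messages"))  # incremental pull fetches only the new message
```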

References:

https://docs.microsoft.com/en-us/azure/app-service-mobile/app-service-mobile-offline-data-sync
