Download Developing Solutions for Microsoft Azure.AZ-203.CertKiller.2019-06-14.52q.vcex

| Item | Details |
| --- | --- |
| Vendor | Microsoft |
| Exam Code | AZ-203 |
| Exam Name | Developing Solutions for Microsoft Azure |
| Date | Jun 14, 2019 |
| File Size | 5 MB |

How to open VCEX files?

Files with VCEX extension can be opened by ProfExam Simulator.


Demo Questions

Question 1

You need to resolve a notification latency issue.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Set Always On to false.

- B. Set Always On to true.

- C. Ensure that the Azure Function is set to use a consumption plan.

- D. Ensure that the Azure Function is using an App Service plan.

Correct answer: BD

Explanation:

Azure Functions can run on either a Consumption plan or a dedicated App Service plan. If you run on a dedicated plan, you need to turn on the Always On setting for your function app to run properly: without it, the Functions runtime goes idle after a few minutes of inactivity, and only HTTP triggers will actually "wake up" your functions. This is similar to how WebJobs must have Always On enabled.

Scenario: Notification latency: Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

Anomaly detection service: You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service.

If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.
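As a sketch of the setting involved: in an ARM template, Always On is the `alwaysOn` flag inside a site's `siteConfig`. The Python below builds that fragment for a Function App and flags apps on a dedicated plan that leave it off. The helper names and the plan resource ID are hypothetical, and treating the `Dynamic` SKU tier as the Consumption plan is an assumption for illustration.

```python
import json

CONSUMPTION_TIER = "Dynamic"  # assumption: SKU tier reported for a Consumption plan


def site_properties(plan_id: str, always_on: bool = True) -> dict:
    """Build an ARM-style 'properties' fragment for a Function App (hypothetical helper)."""
    return {
        "serverFarmId": plan_id,               # resource ID of the hosting plan
        "siteConfig": {"alwaysOn": always_on},
    }


def needs_always_on_fix(plan_tier: str, properties: dict) -> bool:
    """Return True when an app on a dedicated plan has Always On disabled."""
    if plan_tier == CONSUMPTION_TIER:
        return False  # Always On does not apply to the Consumption plan
    return not properties.get("siteConfig", {}).get("alwaysOn", False)


if __name__ == "__main__":
    # Placeholder resource ID; substitute your own subscription, group, and plan.
    plan = "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Web/serverfarms/<plan>"
    props = site_properties(plan)
    print(json.dumps(props, indent=2))
    print(needs_always_on_fix("Standard", props))
```

The check deliberately skips Consumption-plan apps, which scale out on demand instead of relying on an always-loaded instance.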

References:

https://github.com/Azure/Azure-Functions/wiki/Enable-Always-On-when-running-on-dedicated-App-Service-Plan

Question 2

You are writing code to create and run an Azure Batch job.

You have created a pool of compute nodes.

You need to choose the right class and its method to submit a batch job to the Batch service.

Which method should you use?

- A. JobOperations.EnableJobAsync(String, IEnumerable<BatchClientBehavior>, CancellationToken)

- B. JobOperations.CreateJob()

- C. CloudJob.Enable(IEnumerable<BatchClientBehavior>)

- D. JobOperations.EnableJob(String, IEnumerable<BatchClientBehavior>)

- E. CloudJob.CommitAsync(IEnumerable<BatchClientBehavior>, CancellationToken)

Correct answer: E

Explanation:

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

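CreateJob only creates an unbound CloudJob object on the client; the Commit method (or its asynchronous counterpart, CommitAsync) submits the job to the Batch service. Initially the job has no tasks.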

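    // Create an unbound CloudJob, attach it to the existing pool, then submit it.
    {
        CloudJob job = batchClient.JobOperations.CreateJob();
        job.Id = JobId;
        job.PoolInformation = new PoolInformation { PoolId = PoolId };
        job.Commit(); // submits the job to the Batch service (CommitAsync is the async variant)
    }
    ...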

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 3

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles.

You need to create compute nodes for the solution on Azure Batch.

What should you do?

- A. In the Azure portal, add a Job to a Batch account.

- B. In a .NET method, call the method: BatchClient.PoolOperations.CreateJob

- C. In Python, implement the class: JobAddParameter

- D. In Azure CLI, run the command: az batch pool create

Correct answer: D

Explanation:

Compute nodes are created as members of a pool, so creating a pool is what provisions them. The az batch pool create command creates a pool and specifies the number of compute nodes, their VM size, and the operating system. A Batch job, by contrast, is a logical grouping of one or more tasks: it includes settings common to the tasks, such as priority and the pool to run tasks on, but it does not create nodes. The BatchClient.PoolOperations class has no CreateJob method; in .NET, jobs are created with BatchClient.JobOperations.CreateJob and pools with BatchClient.PoolOperations.CreatePool.

Note:

Step 1: Create a pool of compute nodes. When you create a pool, you specify the number of compute nodes for the pool, their size, and the operating system. When each task in your job runs, it's assigned to execute on one of the nodes in your pool.

Step 2: Create a job. A job manages a collection of tasks. You associate each job to a specific pool where that job's tasks will run.

Step 3: Add tasks to the job. Each task runs the application or script that you uploaded to process the data files it downloads from your Storage account. As each task completes, it can upload its output to Azure Storage.
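The three steps can be mapped onto the Azure CLI `az batch pool create`, `az batch job create`, and `az batch task create` commands. The Python sketch below only assembles and prints the command lines (a dry run). The pool, job, and task IDs, the VM size, the image, and the node agent SKU are hypothetical values, and it assumes you have already signed in to a Batch account (for example with `az batch account login`).

```python
import shlex

# Hypothetical names; replace with your own values.
POOL_ID = "mypool"
JOB_ID = "myjob"


def batch_commands(task_count: int = 2) -> list[list[str]]:
    """Return az CLI argument lists in order: pool -> job -> tasks (dry run only)."""
    commands = [
        # Step 1: the pool is what creates the compute nodes.
        ["az", "batch", "pool", "create",
         "--id", POOL_ID,
         "--vm-size", "Standard_A1_v2",
         "--target-dedicated-nodes", "2",
         "--image", "canonical:ubuntuserver:18.04-LTS",
         "--node-agent-sku-id", "batch.node.ubuntu 18.04"],
        # Step 2: the job groups tasks and points at the pool.
        ["az", "batch", "job", "create", "--id", JOB_ID, "--pool-id", POOL_ID],
    ]
    # Step 3: each task runs a command line on one of the pool's nodes.
    for i in range(1, task_count + 1):
        commands.append(["az", "batch", "task", "create",
                         "--task-id", f"mytask{i}",
                         "--job-id", JOB_ID,
                         "--command-line", "/bin/bash -c 'printenv | grep AZ_BATCH'"])
    return commands


if __name__ == "__main__":
    for argv in batch_commands():
        print(shlex.join(argv))  # print instead of running: this is a dry run
```

Keeping the commands as argument lists avoids shell quoting problems if you later pass them to `subprocess.run`.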

Incorrect Answers:

C: To create a Batch pool in Python, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 4

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles.

You need to create compute nodes for the solution on Azure Batch.

What should you do?

- A. In the Azure portal, create a Batch account.

- B. In a .NET method, call the method: BatchClient.PoolOperations.CreatePool

- C. In Python, implement the class: JobAddParameter

- D. In Python, implement the class: TaskAddParameter

Correct answer: B

Explanation:

Compute nodes are created as part of a pool. The app uses the BatchClient.PoolOperations.CreatePool method to define a pool, specifying the number of compute nodes, their VM size, and the virtual machine configuration, and then calls Commit on the returned CloudPool to create it in the Batch service. A job, by contrast, is a logical grouping of one or more tasks that runs on an existing pool; it is created with BatchClient.JobOperations.CreateJob.

Incorrect Answers:

C, D: To create a Batch pool in Python, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

https://docs.microsoft.com/en-us/azure/batch/quick-run-python

Question 5

You have an Azure Batch project that processes and converts files and stores the files in Azure storage. You are developing a function to start the batch job.

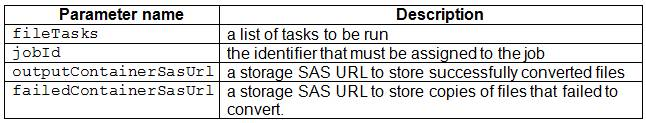

You add the following parameters to the function.

You must ensure that converted files are placed in the container referenced by the outputContainerSasUrl parameter. Files which fail to convert are placed in the container referenced by the failedContainerSasUrl parameter.

You need to ensure the files are correctly processed.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: CreateJob

Box 2: TaskSuccess

TaskSuccess: Upload the file(s) only after the task process exits with an exit code of 0.

Incorrect: TaskCompletion: Upload the file(s) after the task process exits, no matter what the exit code was.

Box 3: TaskFailure

TaskFailure: Upload the file(s) only after the task process exits with a nonzero exit code.

Box 4: OutputFiles

To specify output files for a task, create a collection of OutputFile objects and assign it to the CloudTask.OutputFiles property when you create the task.
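The three upload conditions behave like a predicate on the task's exit code. The Python below is a plain model of the `OutputFileUploadCondition` values described above and of how Boxes 2 and 3 route files to the two containers; it does not call the Batch SDK, and the enum and function names are hypothetical.

```python
from enum import Enum


class UploadCondition(Enum):
    """Models the three OutputFileUploadCondition values."""
    TASK_SUCCESS = "TaskSuccess"        # upload only when the exit code is 0
    TASK_FAILURE = "TaskFailure"        # upload only when the exit code is nonzero
    TASK_COMPLETION = "TaskCompletion"  # upload whatever the exit code


def should_upload(condition: UploadCondition, exit_code: int) -> bool:
    """Decide whether an output file with this condition is uploaded."""
    if condition is UploadCondition.TASK_SUCCESS:
        return exit_code == 0
    if condition is UploadCondition.TASK_FAILURE:
        return exit_code != 0
    return True  # TASK_COMPLETION


# One output spec per destination, keyed by the question's parameter names.
OUTPUT_SPECS = [
    ("outputContainerSasUrl", UploadCondition.TASK_SUCCESS),
    ("failedContainerSasUrl", UploadCondition.TASK_FAILURE),
]


def destinations(exit_code: int) -> list[str]:
    """Return which containers would receive the task's files for this exit code."""
    return [target for target, cond in OUTPUT_SPECS if should_upload(cond, exit_code)]


if __name__ == "__main__":
    for code in (0, 1):
        print(code, destinations(code))
```

Because TaskSuccess and TaskFailure are mutually exclusive, each file lands in exactly one container; using TaskCompletion for either spec would send failed conversions to the output container as well.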

References:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.batch.protocol.models.outputfileuploadcondition

https://docs.microsoft.com/en-us/azure/batch/batch-task-output-files

Question 6

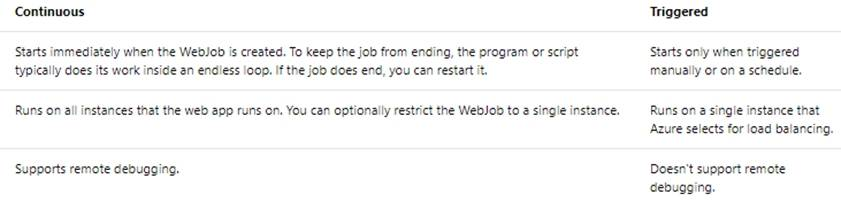

You are developing Azure WebJobs.

You need to recommend a WebJob type for each scenario.

Which WebJob type should you recommend? To answer, drag the appropriate WebJob types to the correct scenarios. Each WebJob type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: Continuous

Continuous runs on all instances that the web app runs on. You can optionally restrict the WebJob to a single instance.

Box 2: Triggered

Triggered runs on a single instance that Azure selects for load balancing.

Box 3: Continuous

Continuous supports remote debugging.
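One way to restrict a continuous WebJob to a single instance is a `settings.job` file at the WebJob's root with `is_singleton` set to `true`; a triggered WebJob can instead carry an NCRONTAB `schedule` in the same file. The Python sketch below writes both variants into a temporary folder that mirrors the `App_Data/jobs/<type>/<name>` layout; the job names and the schedule value are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def write_settings_job(job_dir: Path, settings: dict) -> Path:
    """Write a settings.job file into a WebJob folder and return its path."""
    job_dir.mkdir(parents=True, exist_ok=True)
    path = job_dir / "settings.job"
    path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return path


# Continuous WebJob pinned to one instance instead of running on every instance.
CONTINUOUS_SINGLETON = {"is_singleton": True}

# Triggered WebJob run on a schedule (NCRONTAB with a seconds field): every 15 minutes.
TRIGGERED_SCHEDULED = {"schedule": "0 */15 * * * *"}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # Hypothetical local layout mirroring App_Data/jobs/<type>/<name>.
        p1 = write_settings_job(Path(root, "continuous", "myjob"), CONTINUOUS_SINGLETON)
        p2 = write_settings_job(Path(root, "triggered", "report"), TRIGGERED_SCHEDULED)
        print(p1.read_text(encoding="utf-8"))
        print(p2.read_text(encoding="utf-8"))
```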

Note:

The following table describes the differences between continuous and triggered WebJobs.

References:

https://docs.microsoft.com/en-us/azure/app-service/web-sites-create-web-jobs

Question 7

You are deploying an Azure Kubernetes Service (AKS) cluster that will use multiple containers.

You need to create the cluster and verify that the services for the containers are configured correctly and available.

Which four commands should you use to develop the solution? To answer, move the appropriate command segments from the list of command segments to the answer area and arrange them in the correct order.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Step 1: az group create

Create a resource group with the az group create command. An Azure resource group is a logical group in which Azure resources are deployed and managed.

Example: The following example creates a resource group named myAKSCluster in the eastus location.

az group create --name myAKSCluster --location eastus

Step 2: az aks create

Use the az aks create command to create an AKS cluster.

Step 3: az aks get-credentials

Configure kubectl with the credentials for the new AKS cluster; kubectl cannot reach the cluster until these credentials are merged into its configuration. Example:

az aks get-credentials --name aks-cluster --resource-group aks-resource-group

Step 4: kubectl apply

To deploy your application, use the kubectl apply command. This command parses the manifest file and creates the defined Kubernetes objects.
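A minimal dry-run sketch of the whole sequence, assuming the Azure CLI and kubectl are installed and you are signed in. It runs `az aks get-credentials` before `kubectl apply`, because kubectl needs the cluster's credentials in its kubeconfig before it can create objects, and it ends with `kubectl get service` to list the deployed services. The resource group, cluster name, node count, and manifest file name `app.yaml` are hypothetical.

```python
import shlex
import subprocess

# Hypothetical names; the manifest is assumed to define your Deployments and Services.
RESOURCE_GROUP = "myResourceGroup"
CLUSTER = "myAKSCluster"
MANIFEST = "app.yaml"

STEPS = [
    ["az", "group", "create", "--name", RESOURCE_GROUP, "--location", "eastus"],
    ["az", "aks", "create", "--resource-group", RESOURCE_GROUP, "--name", CLUSTER,
     "--node-count", "1", "--generate-ssh-keys"],
    # Merge the cluster's credentials into the local kubeconfig so kubectl can reach it.
    ["az", "aks", "get-credentials", "--resource-group", RESOURCE_GROUP, "--name", CLUSTER],
    ["kubectl", "apply", "-f", MANIFEST],
    # List the services to check that they exist and see their IP addresses.
    ["kubectl", "get", "service"],
]


def run(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in steps:
        print("$", shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing command


if __name__ == "__main__":
    run(STEPS)  # pass dry_run=False to actually execute
```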

References:

https://docs.bitnami.com/azure/get-started-aks/

Question 8

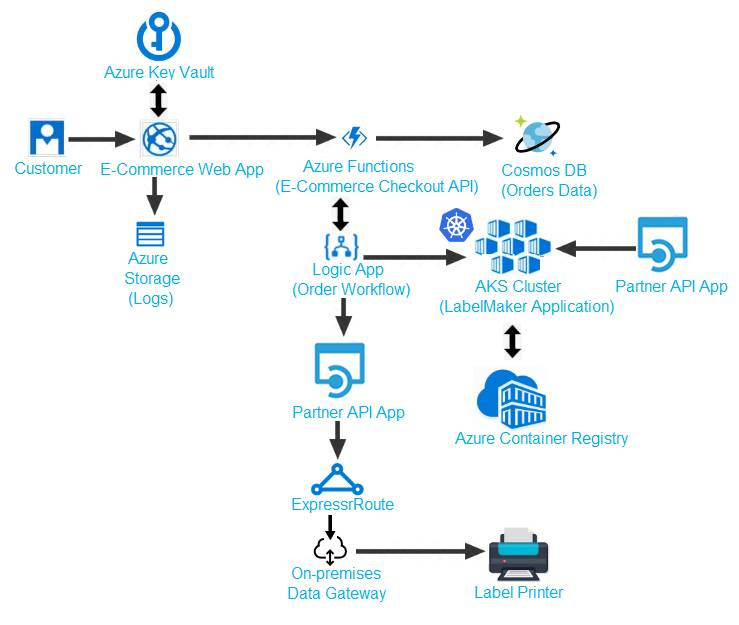

You need to implement the e-commerce checkout API.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Set the function template’s Mode property to Webhook and the Webhook type property to Generic JSON.

- B. Create an Azure Function using the HTTP POST function template.

- C. In the Azure Function App, enable Cross-Origin Resource Sharing (CORS) with all origins permitted.

- D. In the Azure Function App, enable Managed Service Identity (MSI).

- E. Set the function template’s Mode property to Webhook and the Webhook type property to GitHub.

- F. Create an Azure Function using the Generic webhook function template.

Correct answer: ABD

Explanation:

Scenario: E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

D: A managed identity from Azure Active Directory allows your app to easily access other AAD-protected resources such as Azure Key Vault.

Incorrect Answers:

C: CORS is an HTTP feature that enables a web application running under one domain to access resources in another domain.
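For options A and B, the function's bindings end up in its function.json file. The Python below sketches the bindings a generic JSON webhook function might carry. `webHookType` belongs to the Azure Functions 1.x HTTP trigger; the `req`/`res` binding names and the `function` auth level are assumptions, and the file a portal template generates may differ.

```python
import json


def webhook_function_json() -> dict:
    """Build function.json bindings for a generic JSON webhook (Functions 1.x)."""
    return {
        "bindings": [
            {
                "type": "httpTrigger",
                "direction": "in",
                "name": "req",
                "authLevel": "function",
                # genericJson accepts only HTTP POST requests with an application/json
                # body, so the 'methods' property is intentionally left unset.
                "webHookType": "genericJson",
            },
            {"type": "http", "direction": "out", "name": "res"},
        ],
        "disabled": False,
    }


if __name__ == "__main__":
    print(json.dumps(webhook_function_json(), indent=2))
```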

References:

https://docs.microsoft.com/en-us/azure/app-service/overview-managed-identity

Question 9

You need to provision and deploy the order workflow.

Which three components should you include? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Connections

- B. On-premises Data Gateway

- C. Workflow definition

- D. Resources

- E. Functions

Correct answer: BCE

Explanation:

Scenario: The order workflow fails to run upon initial deployment to Azure.

Question 10

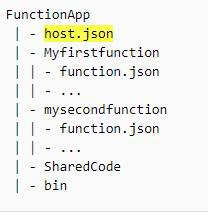

You develop a serverless application using several Azure Functions. These functions connect to data from within the code.

You want to configure tracing for an Azure Function App project.

You need to change configuration settings in the host.json file.

Which tool should you use?

- A. Visual Studio

- B. Azure portal

- C. Azure PowerShell

- D. Azure Functions Core Tools (Azure CLI)

Correct answer: B

Explanation:

The function editor built into the Azure portal lets you update the function.json file and the code file for a function. The host.json file, which contains some runtime-specific configurations, is in the root folder of the function app.
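Whichever tool you use, the change lands in host.json at the function app root. A minimal Python sketch that merges a tracing section into that file; it assumes the Functions 1.x `tracing` schema (`consoleLevel`, `fileLoggingMode`), whereas 2.x and later use a `logging` section instead, and the temporary folder stands in for a real app root.

```python
import json
import tempfile
from pathlib import Path


def set_tracing(app_root: Path, console_level: str = "verbose",
                file_logging_mode: str = "debugOnly") -> dict:
    """Merge a 1.x-style 'tracing' section into <app_root>/host.json and return the result."""
    host_json = app_root / "host.json"
    config = json.loads(host_json.read_text(encoding="utf-8")) if host_json.exists() else {}
    config["tracing"] = {
        "consoleLevel": console_level,         # off, error, warning, info, verbose
        "fileLoggingMode": file_logging_mode,  # never, always, debugOnly
    }
    host_json.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:  # stands in for the function app root
        Path(root, "host.json").write_text('{"functionTimeout": "00:05:00"}', encoding="utf-8")
        print(json.dumps(set_tracing(Path(root)), indent=2))
```

Merging rather than overwriting keeps any other runtime settings already present in host.json.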

References:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-reference#fileupdate
