Download Certified Kubernetes Application Developer.CKAD.VCEplus.2022-10-14.33q.vcex

| Field | Value |
| --- | --- |
| Vendor | Linux Foundation |
| Exam Code | CKAD |
| Exam Name | Certified Kubernetes Application Developer |
| Date | Oct 14, 2022 |
| File Size | 21 MB |
| Downloads | 3 |

Demo Questions

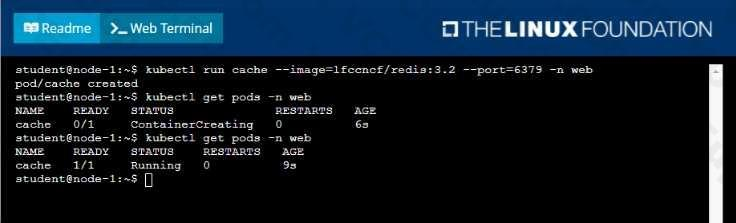

Question 1

Context

A web application requires a specific version of redis to be used as a cache.

Task

Create a pod with the following characteristics, and leave it running when complete:

- The pod must run in the web namespace.

- The namespace has already been created

- The name of the pod should be cache

- Use the lfccncf/redis image with the 3.2 tag

- Expose port 6379

- See the solution below.

Correct answer: A

Explanation:

Solution:

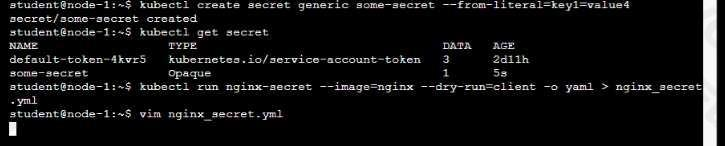
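For readers who cannot see the image, the following is a minimal sketch of a manifest that would satisfy the task as stated. It reads the image name as `lfccncf/redis`, consistent with the `lfccncf/nginx` image used elsewhere in this document. The container name is an assumption, since the task only names the pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cache            # pod name required by the task
  namespace: web         # namespace already exists per the task
spec:
  containers:
    - name: cache        # assumed container name; not specified in the task
      image: lfccncf/redis:3.2
      ports:
        - containerPort: 6379
```

Saved to a file of your choosing, it could be created with `kubectl apply -f <file>` and checked with `kubectl get pod cache -n web`.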

Question 2

Context

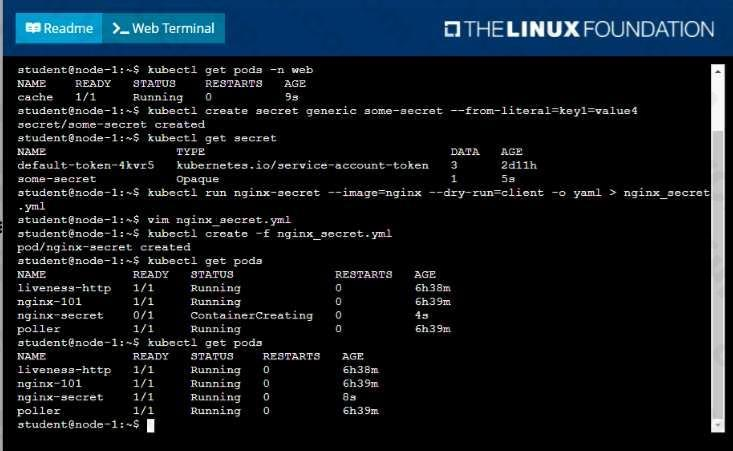

You are tasked to create a secret and consume the secret in a pod using environment variables as follows:

Task

- Create a secret named another-secret with a key/value pair: key1/value4

- Start an nginx pod named nginx-secret using container image nginx, and add an environment variable exposing the value of the secret key key1, using COOL_VARIABLE as the name for the environment variable inside the pod

- See the solution below.

Correct answer: A

Explanation:

Solution:
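No solution text survives for this question. One way to approach it, sketched under the assumption that both objects live in the current namespace (the task does not name one), is a Secret plus a Pod that reads one key through `secretKeyRef`. The container name is also an assumption.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: another-secret
type: Opaque
stringData:
  key1: value4                   # plain-text value; the API server stores it base64-encoded
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-secret
spec:
  containers:
    - name: nginx-secret         # assumed container name
      image: nginx
      env:
        - name: COOL_VARIABLE    # variable name required by the task
          valueFrom:
            secretKeyRef:
              name: another-secret
              key: key1
```

Once the pod is running, `kubectl exec nginx-secret -- env` should list `COOL_VARIABLE` among the environment variables.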

Question 3

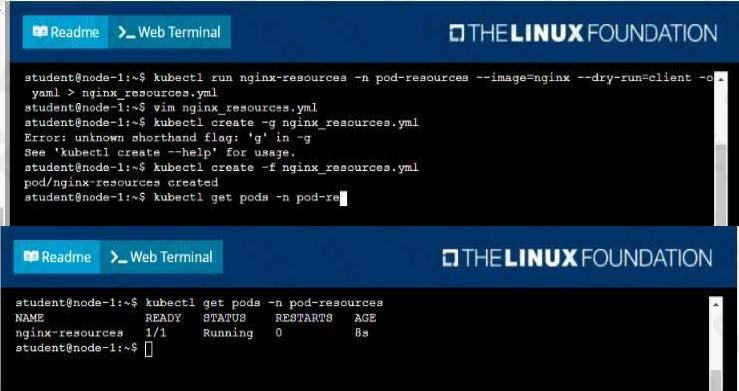

Context

Task

You are required to create a pod that requests a certain amount of CPU and memory, so it gets scheduled to a node that has those resources available.

- Create a pod named nginx-resources in the pod-resources namespace that requests a minimum of 200m CPU and 1Gi memory for its container

- The pod should use the nginx image

- The pod-resources namespace has already been created

- See the solution below.

Correct answer: A

Explanation:

Solution:
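No solution text survives for this question. A minimal sketch of a manifest matching the stated requirements might look like the following. The container name is an assumption, and only `requests` are set because the task asks for a minimum, not a limit.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-resources
  namespace: pod-resources     # namespace already exists per the task
spec:
  containers:
    - name: nginx-resources    # assumed container name
      image: nginx
      resources:
        requests:
          cpu: 200m            # 0.2 of a CPU core
          memory: 1Gi
```

The scheduler only places the pod on a node with at least this much unreserved CPU and memory. If no node qualifies, the pod stays in `Pending`.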

Question 4

Context

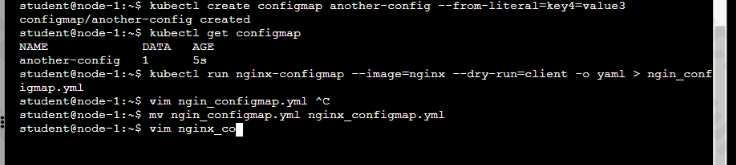

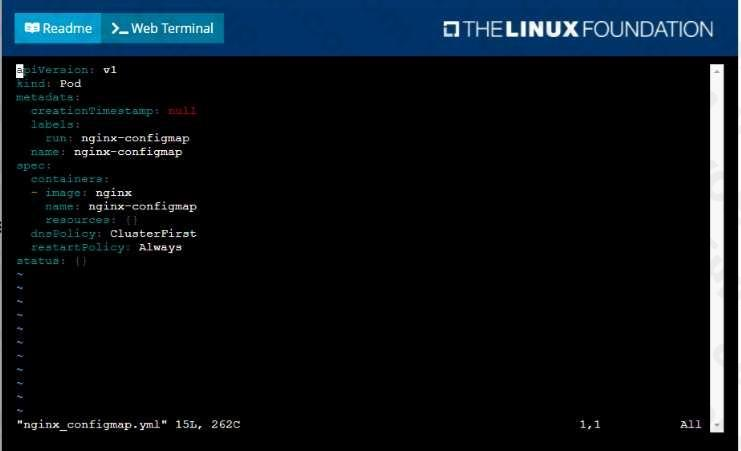

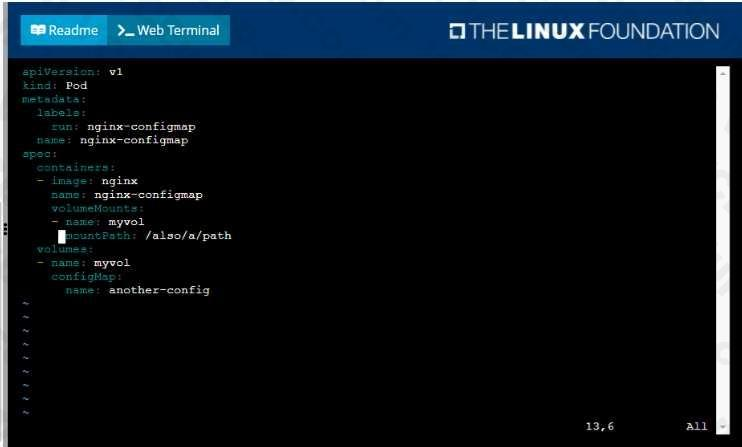

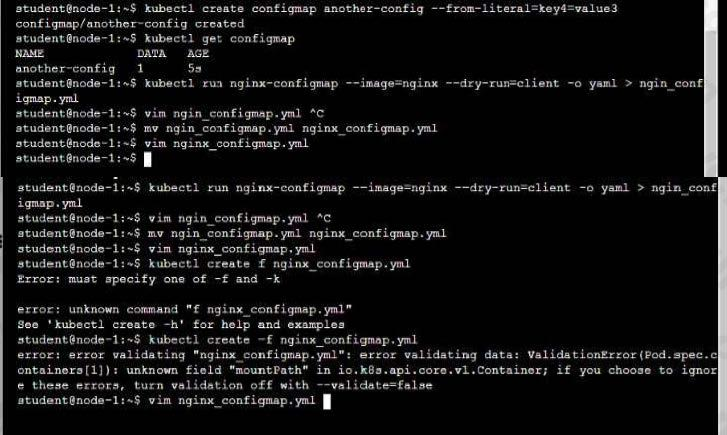

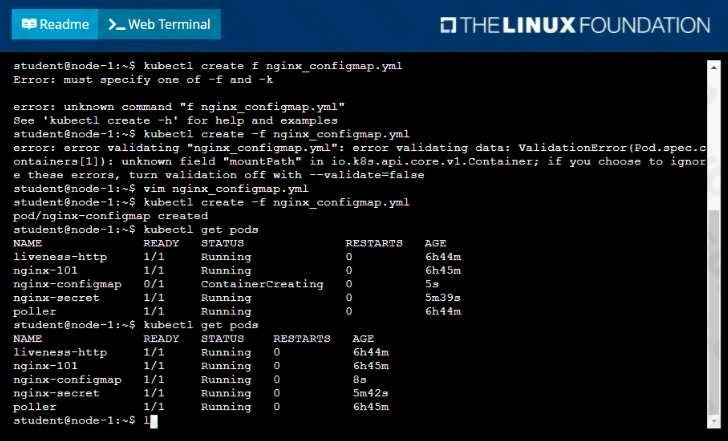

You are tasked to create a ConfigMap and consume the ConfigMap in a pod using a volume mount.

Task

Please complete the following:

- Create a ConfigMap named another-config containing the key/value pair: key4/value3

- Start a pod named nginx-configmap containing a single container using the nginx image, and mount the key you just created into the pod under directory /also/a/path

- See the solution below.

Correct answer: A

Explanation:

Solution:
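No solution text survives for this question. A sketch of one possible answer, assuming the current namespace and hypothetical names for the container and the volume, is a ConfigMap plus a Pod that mounts it as a volume:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: another-config
data:
  key4: value3
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-configmap
spec:
  containers:
    - name: nginx-configmap        # assumed container name
      image: nginx
      volumeMounts:
        - name: config-volume      # hypothetical volume name
          mountPath: /also/a/path
  volumes:
    - name: config-volume
      configMap:
        name: another-config
```

With a ConfigMap volume, each key becomes a file, so the value should be readable at `/also/a/path/key4` inside the container.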

Question 5

Context

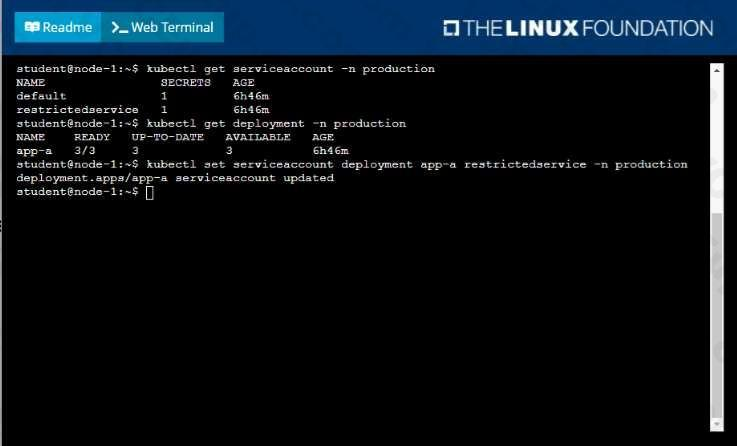

Your application's namespace requires a specific service account to be used.

Task

Update the app-a deployment in the production namespace to run as the restrictedservice service account. The service account has already been created.

- See the solution below.

Correct answer: A

Explanation:

Solution:
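No solution text survives for this question. The change comes down to one field in the Deployment's pod template. The fragment below is a sketch of that field only, not a complete manifest:

```yaml
# Fragment of the app-a Deployment in the production namespace.
# Only the field that changes is shown; the rest of the spec stays as it is.
spec:
  template:
    spec:
      serviceAccountName: restrictedservice
```

The field can be added with `kubectl edit deployment app-a -n production`. Alternatively, `kubectl set serviceaccount deployment app-a restrictedservice -n production` should make the same change. Either way the Deployment rolls out new pods that run under the service account.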

Question 6

Context

A pod is running on the cluster but it is not responding.

Task

The desired behavior is to have Kubernetes restart the pod when the /healthz endpoint returns an HTTP 500. The service, probe-pod, should never send traffic to the pod while it is failing.

Please complete the following:

- The application has an endpoint, /started, that will indicate if it can accept traffic by returning an HTTP 200. If the endpoint returns an HTTP 500, the application has not yet finished initialization.

- The application has another endpoint /healthz that will indicate if the application is still working as expected by returning an HTTP 200. If the endpoint returns an HTTP 500 the application is no longer responsive.

- Configure the probe-pod pod provided to use these endpoints

- The probes should use port 8080

- See the solution below.

Correct answer: A

Explanation:

Solution:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: k8s.gcr.io/busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5

The initialDelaySeconds field tells the kubelet that it should wait 5 seconds before performing the first probe. To perform a probe, the kubelet executes the command cat /tmp/healthy in the target container. If the command succeeds, it returns 0, and the kubelet considers the container to be alive and healthy. If the command returns a non-zero value, the kubelet kills the container and restarts it.

When the container starts, it executes this command:

/bin/sh -c "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600"

For the first 30 seconds of the container's life, there is a /tmp/healthy file. So during the first 30 seconds, the command cat /tmp/healthy returns a success code. After 30 seconds, cat /tmp/healthy returns a failure code.

Create the Pod:

kubectl apply -f https://k8s.io/examples/pods/probe/exec-liveness.yaml

Within 30 seconds, view the Pod events:

kubectl describe pod liveness-exec

The output indicates that no liveness probes have failed yet:

    FirstSeen  LastSeen  Count  From                  SubobjectPath              Type    Reason     Message
    ---------  --------  -----  ----                  -------------              ------  ------     -------
    24s        24s       1      {default-scheduler }                             Normal  Scheduled  Successfully assigned liveness-exec to worker0
    23s        23s       1      {kubelet worker0}     spec.containers{liveness}  Normal  Pulling    pulling image "k8s.gcr.io/busybox"
    23s        23s       1      {kubelet worker0}     spec.containers{liveness}  Normal  Pulled     Successfully pulled image "k8s.gcr.io/busybox"
    23s        23s       1      {kubelet worker0}     spec.containers{liveness}  Normal  Created    Created container with docker id 86849c15382e; Security:[seccomp=unconfined]
    23s        23s       1      {kubelet worker0}     spec.containers{liveness}  Normal  Started    Started container with docker id 86849c15382e

After 35 seconds, view the Pod events again:

kubectl describe pod liveness-exec

At the bottom of the output, there are messages indicating that the liveness probes have failed, and the containers have been killed and recreated.

    FirstSeen  LastSeen  Count  From                  SubobjectPath              Type     Reason     Message
    ---------  --------  -----  ----                  -------------              -------  ------     -------
    37s        37s       1      {default-scheduler }                             Normal   Scheduled  Successfully assigned liveness-exec to worker0
    36s        36s       1      {kubelet worker0}     spec.containers{liveness}  Normal   Pulling    pulling image "k8s.gcr.io/busybox"
    36s        36s       1      {kubelet worker0}     spec.containers{liveness}  Normal   Pulled     Successfully pulled image "k8s.gcr.io/busybox"
    36s        36s       1      {kubelet worker0}     spec.containers{liveness}  Normal   Created    Created container with docker id 86849c15382e; Security:[seccomp=unconfined]
    36s        36s       1      {kubelet worker0}     spec.containers{liveness}  Normal   Started    Started container with docker id 86849c15382e
    2s         2s        1      {kubelet worker0}     spec.containers{liveness}  Warning  Unhealthy  Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Wait another 30 seconds, and verify that the container has been restarted:

kubectl get pod liveness-exec

The output shows that RESTARTS has been incremented:

    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   1          1m

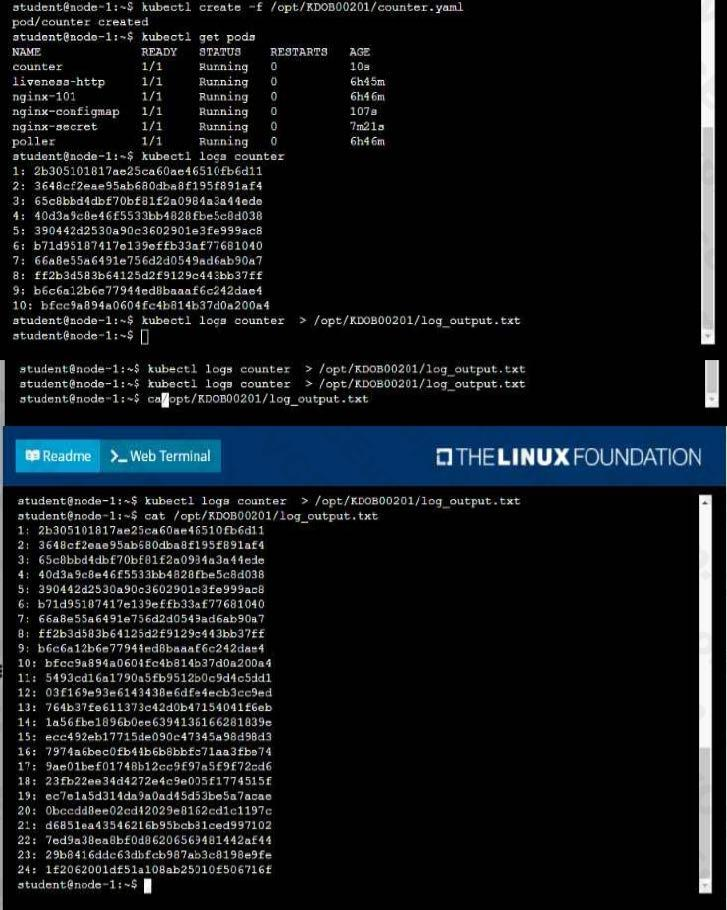
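The walkthrough above demonstrates an exec liveness probe on a pod named liveness-exec, whereas the task asks for HTTP checks on probe-pod. A minimal sketch of the probe fields for the task as stated follows, assuming they are added under the pod's existing container. Here /started is mapped to a readiness probe, which gates Service traffic, and /healthz to a liveness probe, which triggers restarts. Timing fields are left at their defaults because the task does not specify any.

```yaml
# Fragment: fields to add under the existing container entry of probe-pod.
# The container's name and image are not given in the task and are left untouched.
readinessProbe:
  httpGet:
    path: /started     # HTTP 200 means the application can accept traffic
    port: 8080
livenessProbe:
  httpGet:
    path: /healthz     # HTTP 500 means the kubelet should restart the container
    port: 8080
```

Probe fields on a running pod cannot be edited in place, so the pod generally has to be deleted and recreated from the updated manifest, for example with `kubectl replace --force -f <file>`.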

Question 7

Context

You sometimes need to observe a pod's logs, and write those logs to a file for further analysis.

Task

Please complete the following:

- Deploy the counter pod to the cluster using the provided YAML spec file at /opt/KDOB00201/counter.yaml

- Retrieve all currently available application logs from the running pod and store them in the file /opt/KDOB00201/log_Output.txt, which has already been created

- See the solution below.

Correct answer: A

Explanation:

Solution:

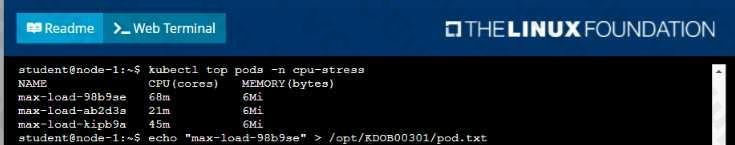

Question 8

Context

It is always useful to look at the resources your applications are consuming in a cluster.

Task

- From the pods running in namespace cpu-stress, write only the name of the pod that is consuming the most CPU to file /opt/KDOBG030l/pod.txt, which has already been created.

- See the solution below.

Correct answer: A

Explanation:

Solution:

Question 9

Context

Anytime a team needs to run a container on Kubernetes they will need to define a pod within which to run the container.

Task

Please complete the following:

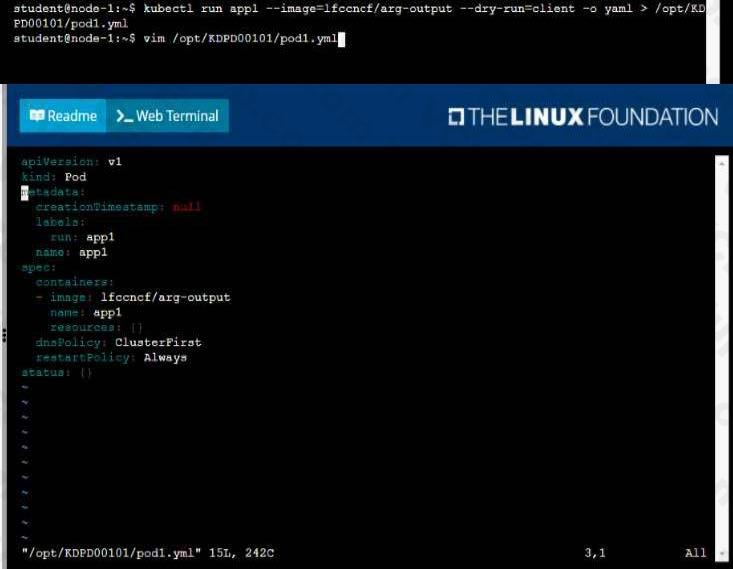

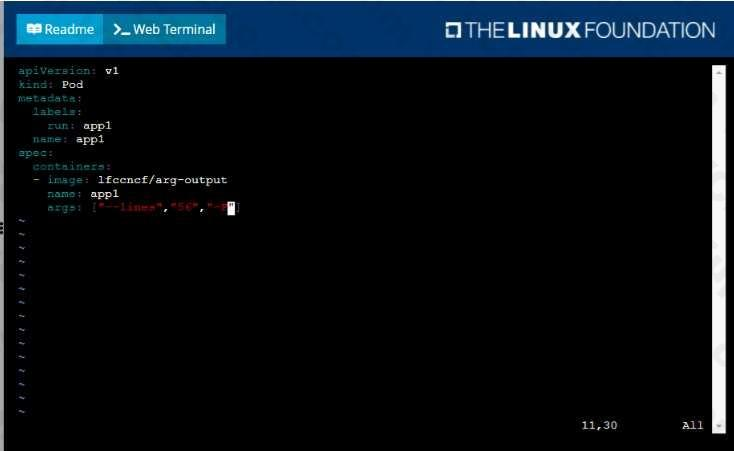

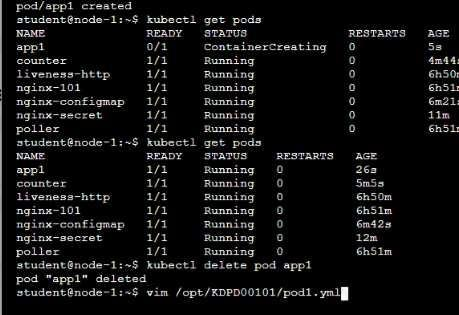

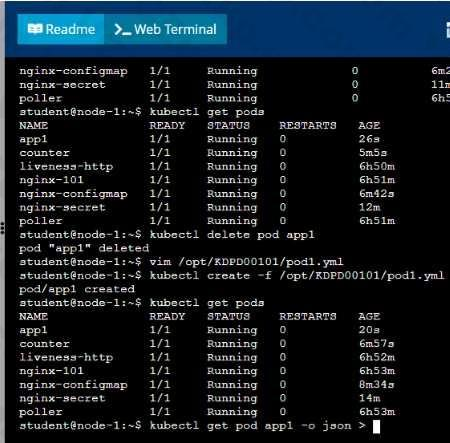

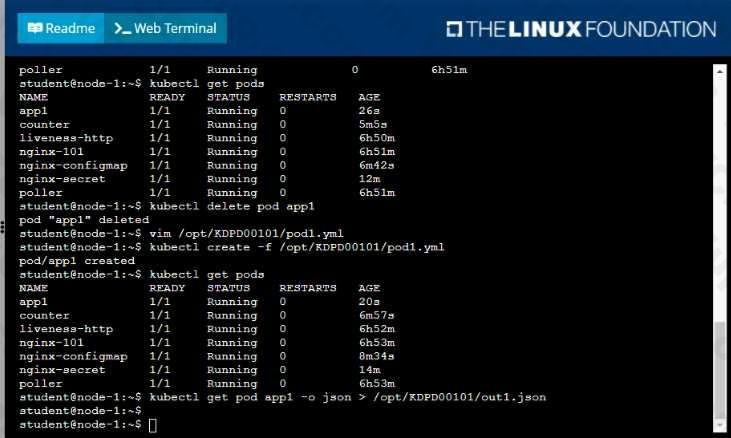

- Create a YAML formatted pod manifest /opt/KDPD00101/podl.yml to create a pod named app1 that runs a container named app1cont using image lfccncf/arg-output with these command line arguments: -lines 56 -F

- Create the pod with the kubectl command using the YAML file created in the previous step

- When the pod is running, display summary data about the pod in JSON format using the kubectl command and redirect the output to a file named /opt/KDPD00101/out1.json

- All of the files you need to work with have been created, empty, for your convenience

- See the solution below.

Correct answer: A

Explanation:

Solution:
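No solution text survives for this question. A sketch of a manifest that fits the description follows. It reads the image name as `lfccncf/arg-output`, consistent with the `lfccncf/nginx` image used elsewhere in this document, and assumes the arguments go in `args` so that the image's own entrypoint is preserved.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app1
spec:
  containers:
    - name: app1cont
      image: lfccncf/arg-output
      # Passed as arguments to the image's entrypoint (an assumption;
      # the task only says "command line arguments").
      args: ["-lines", "56", "-F"]
```

After the manifest is saved to the file named in the task and applied with `kubectl apply -f`, the JSON summary could be captured with `kubectl get pod app1 -o json > /opt/KDPD00101/out1.json`.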

Question 10

Context

Task

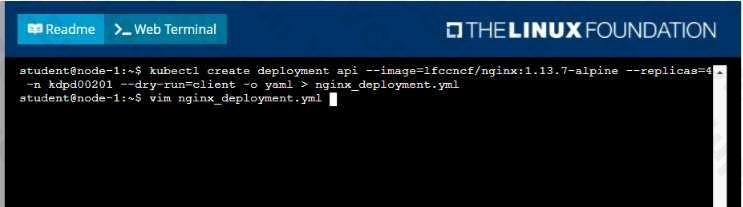

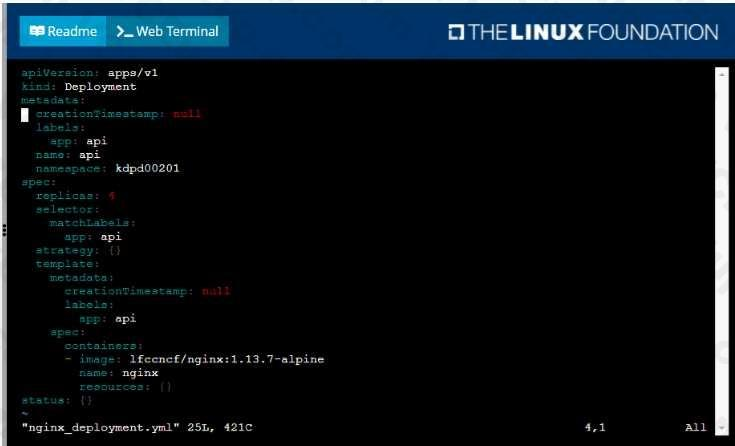

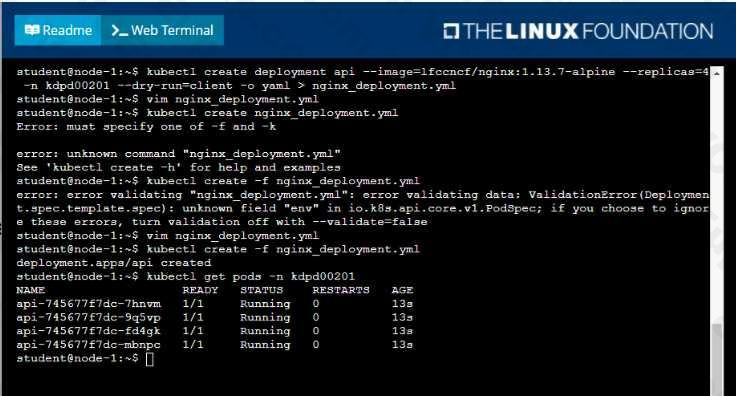

Create a new deployment for running nginx with the following parameters:

- Run the deployment in the kdpd00201 namespace. The namespace has already been created

- Name the deployment frontend and configure with 4 replicas

- Configure the pod with a container image of lfccncf/nginx:1.13.7

- Set an environment variable of NGINX__PORT=8080 and also expose that port for the container above

- See the solution below.

Correct answer: A

Explanation:

Solution:
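No solution text survives for this question. A minimal sketch of a Deployment matching the listed parameters follows. The `app: frontend` label and the container name are assumptions, since a Deployment needs a selector but the task does not specify one. The environment variable name is copied exactly as written in the task.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  namespace: kdpd00201         # namespace already exists per the task
spec:
  replicas: 4
  selector:
    matchLabels:
      app: frontend            # assumed label; must match the template labels
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: nginx          # assumed container name
          image: lfccncf/nginx:1.13.7
          env:
            - name: NGINX__PORT    # spelled as it appears in the task text
              value: "8080"        # env values must be strings
          ports:
            - containerPort: 8080
```

`kubectl get deployment frontend -n kdpd00201` should report 4/4 ready replicas once the rollout completes.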
